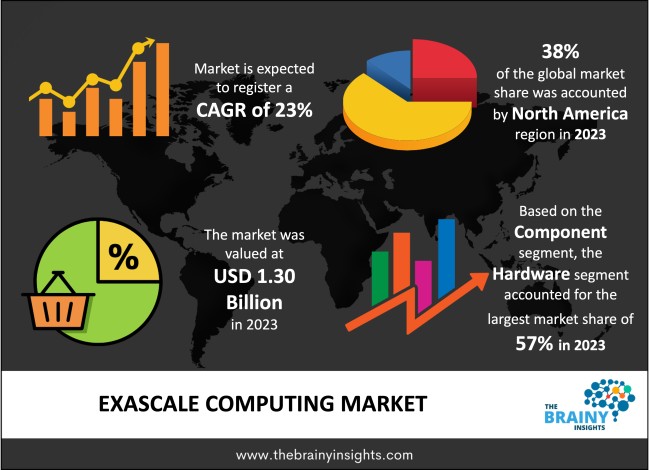

The global exascale computing market was valued at USD 1.30 billion in 2023 and is projected to grow at a CAGR of 23% from 2024 to 2033, reaching USD 10.30 billion by 2033. The rising volume of data will drive the growth of the global exascale computing market.
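The headline figures can be cross-checked with the standard compound annual growth rate formula. The sketch below is illustrative only: the `cagr` helper is a hypothetical name, and it assumes ten compounding periods between the 2023 base year and 2033.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.23 means 23%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the report: USD 1.30 billion (2023) and USD 10.30 billion (2033).
# Assumption: ten annual compounding periods between the two values.
rate = cagr(1.30, 10.30, 2033 - 2023)
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption the implied rate comes out at roughly 23%, which agrees with the stated CAGR.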

Exascale computing refers to computing systems capable of performing at least one exaflop, or 10^18 floating-point operations per second (FLOPS). This is a thousandfold advance beyond present petascale systems, which operate at 10^15 FLOPS. Exascale computing plays a crucial role in solving scientific, engineering, and data-intensive problems. It is expected to substantially improve the capacity to address grand challenges in fields such as climate science, chemistry, biology, and astronomy. Exascale systems will help researchers run high-resolution modelling, simulation, and big data analysis faster, enabling speedier innovation. Exascale computing can be considered a qualitative leap in high-performance computing capability, one that will have a significant impact on research and industry by offering unprecedented computational power.

The growing high-intensity computational needs – Demand for exascale computing is driven by rising computational requirements that stem from the accelerating pace of data generation. The volume of data produced is roughly doubling every two years. Furthermore, the influx of AI and ML technologies has increased the need for greater computational capability, and the need to train AI algorithms faster has spurred demand for exascale systems. As more companies adopt data-oriented strategies, the capacity to process large volumes of information in real time becomes vital for competitiveness and performance optimization. Therefore, growing high-intensity computational needs, fuelled by ever-increasing data generation, will contribute to the global exascale computing market’s growth.

The high costs of exascale computing – Building exascale systems requires top-tier hardware that can deliver robust performance. This includes processors and memory systems, alongside specialized technology such as Graphics Processing Units (GPUs) and Field Programmable Gate Arrays (FPGAs), all of which are costly. In addition, sophisticated cooling systems, high-capacity power delivery, and suitable data centre infrastructure add to the costs. Software development is a further expense. Designing fast software and adapting it to exascale systems is not an easy task, as it requires extensive research and development, including new algorithms and new programming models. Such specialized software development increases costs. At the operational level, maintenance, electricity consumption, and cooling must also be included in total expenses. Therefore, the high costs of exascale computing will hamper the market’s growth.

The proliferation of AI and ML technologies worldwide – AI and ML are at the forefront of demand for exascale computing, since these models need immense computational capability for training and deployment. Growing demand for artificial intelligence and machine learning solutions across sectors such as healthcare, finance, automotive, and entertainment has increased the need for more capable computing systems that can support highly complex algorithms and data sets. Today’s AI models, especially deep learning networks, are typically trained on data sets that range from terabytes to petabytes in size. This intensive training requires vast numbers of calculations, which is why traditional computing platforms are unsuitable for the purpose. Exascale systems can accommodate such workloads, allowing researchers and organizations to create more complex AI models with greater precision and effectiveness. Therefore, advances in AI and ML contribute to the increasing demand for exascale computing worldwide.

The regions analyzed for the market include North America, Europe, South America, Asia Pacific, and the Middle East and Africa. North America emerged as the largest regional exascale computing market, with a 38% market revenue share in 2023.

North America is expected to dominate the exascale computing market, mainly due to its strong cluster of research centres, highly advanced technological foundation, and substantial government funding. The United States has made outstanding contributions to high-performance computing (HPC) that have served as models for the rest of the world. The high concentration of prestigious universities and national laboratories has laid firm research and development groundwork for exascale computing. These institutions host some of the most powerful computing clusters in the world by today’s standards, enabling research in areas such as climate science, materials science, and biomedical research. Moreover, government support and dedicated funding programs augment the regional market’s growth. Partnerships among the public sector, private industry, and universities have helped enable innovation in both the hardware and the software required for exascale computing.

North America Region Exascale Computing Market Share in 2023 - 38%

The component segment is divided into hardware and software. The hardware segment dominated the market, with a market share of around 57% in 2023. Exascale computing requires advanced, specialized hardware components, including processors, memory architectures, and dedicated accelerators. CPUs are primary components in these systems. The importance of suitable hardware is heightened by the complexity of applications in fields such as artificial intelligence, machine learning, and scientific computing. Because these applications require ever more computation, meeting their requirements forces the evolution of the associated processors and memory. Furthermore, ongoing improvements in computer hardware shape the overall structure and organization of computing systems.

The deployment type segment is divided into on-premises and cloud-based solutions. The on-premises segment dominated the market, with a market share of around 55% in 2023. The on-premises model holds the majority share largely because it gives organizations the means to manage, customize, and deploy computing resources for large computational workloads. It is used where an organization wants full control over its key applications and data, which is common in research facilities and government agencies. This model lets them choose both hardware and software according to the needs of each project, and so build the most suitable computational environment. Further, on-premises systems can help achieve better response times and bandwidth utilization. The on-premises deployment model will remain popular because performance, security, and control of computational resources remain high priorities for organizations in the exascale marketplace.

The application segment is divided into scientific research, artificial intelligence and machine learning, financial services, manufacturing, healthcare, and government and defence. The scientific research segment dominated the market, with a market share of around 34% in 2023. Scientists and engineers in fields like physics, climate science, biology, and materials science need more modelling and analysis capability than conventional architectures can provide. Exascale computing allows researchers to model complex processes in detail and is vital for applications such as climate science, genomics, and drug discovery. Furthermore, scientific research often draws on results from different institutions, and even different countries, and therefore entails sharing and analysing huge datasets. Exascale computing underpins such collaborations by offering algorithms and effective tools for analysing the data.

The end user segment is divided into academic and research institutions, government organizations, and private sector enterprises. The academic and research institutions segment dominated the market, with a market share of around 43% in 2023. The academic and research sector is the largest consumer of exascale computing services because it is the main driver of scientific development and innovation across many fields. These organizations are in the vanguard of scientific work that calls for major computational resources, including computational astrophysics, intricate biological modelling, and, in particular, large-scale data analysis. Moreover, academic institutions rely on exascale systems for large-scale projects and for multidisciplinary partnerships with government agencies and private industry. Collaborations of this type can lead to major advances in fields such as climate science, renewable energy, and materials science. The exascale computing resources that accompany these partnerships help researchers tackle big questions that demand vast computing capacity and fresh ideas.

| Attribute | Description |
|---|---|
| Market Size | Revenue (USD Billion) |
| Market size value in 2023 | USD 1.30 Billion |
| Market size value in 2033 | USD 10.30 Billion |
| CAGR (2024 to 2033) | 23% |
| Historical data | 2020-2022 |
| Base Year | 2023 |
| Forecast | 2024-2033 |
| Region | The regions analyzed for the market are Asia Pacific, Europe, South America, North America, and Middle East and Africa. The regions are further analyzed at the country level. |
| Segments | Component, Deployment Type, Application, and End User |

As per The Brainy Insights, the global exascale computing market was valued at USD 1.30 billion in 2023 and is expected to reach USD 10.30 billion by 2033.

The global exascale computing market is projected to grow at a CAGR of 23% during the forecast period 2024-2033.

The market's growth will be influenced by the growing high-intensity computational needs.

The high costs of exascale computing could hamper the market growth.

This study forecasts revenue at global, regional, and country levels from 2020 to 2033. The Brainy Insights has segmented the global exascale computing market into the following segments:

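Global Exascale Computing Market by Component:

- Hardware
- Software

Global Exascale Computing Market by Deployment Type:

- On-Premises
- Cloud-Based

Global Exascale Computing Market by Application:

- Scientific Research
- Artificial Intelligence and Machine Learning
- Financial Services
- Manufacturing
- Healthcare
- Government and Defence

Global Exascale Computing Market by End User:

- Academic and Research Institutions
- Government Organizations
- Private Sector Enterprises

Global Exascale Computing Market by Region:

- North America
- Europe
- Asia Pacific
- South America
- Middle East and Africa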

Research serves a specific purpose: enabling effective marketing. In a competitive environment, businesses need information across all industry verticals, including customer wants, market demand, competition, industry trends, and distribution channels. This information needs to be updated regularly because businesses operate in a dynamic environment. Our organization, The Brainy Insights, applies scientific and systematic research procedures to obtain sound market insights and industry analysis for overall business success. The analysis studies the market at a granular level, using statistical tools that help us examine the data with accuracy and precision.

Our research reports cover both the quantitative and qualitative aspects of a market. Qualitative information is fundamental to any market research process because it reveals customer needs and wants, as well as usage and consumption of a product or service in a specific industry. This in turn helps marketers and investors understand customer perceptions. Qualitative research can shed light on different product concepts and designs, along with unique service offerings, which helps define marketing problems and generate opportunities. Quantitative research, on the other hand, collects data through interviews, e-mail interactions, surveys, and pilot studies. It is used to validate the hypotheses generated during qualitative research, explore empirical patterns in the data with statistical tools, and finally produce the market estimates.

The Brainy Insights offers comprehensive research and analysis based on a wide assortment of factual insights gained through interviews with CXOs and global experts, together with secondary data from reliable sources. Our analysts and industry specialists play vital roles in building the statistical tools and analysis models used to analyse the data and arrive at accurate, informative findings. The data provided by our organization has proven valuable to a diverse range of companies, helping them determine which products or services are the most appealing, whether customers use a product in the manner anticipated, what the market's purchasing intentions are, and more.

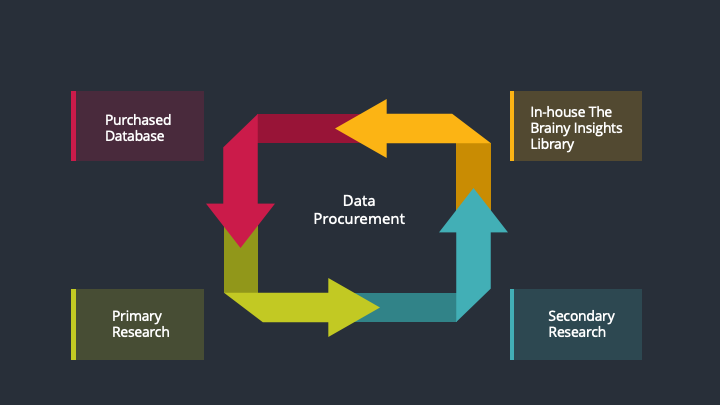

Our research methodology combines primary and secondary initiatives. Key phases involved in this process are listed below:

The data procurement phase involves gathering market data and related information from a range of sources and research procedures. This stage requires extensive research. The data sources include:

Purchased Database: Purchased databases play a crucial role in estimating market sizes, irrespective of the domain. Our purchased databases include:

Primary Research: The Brainy Insights interacts with leading companies and experts in the relevant domain to develop the analyst team’s market understanding and expertise. This improves and substantiates every data point presented in the market reports. Primary research mainly involves telephone interviews, e-mail interactions, and face-to-face interviews with raw material providers, manufacturers/producers, distributors, and independent consultants. The interviews we conduct provide valuable data on market size and prevailing industry growth trends. Our organization also conducts surveys with industry experts to gain an overall view of the industry or market. For instance, in the healthcare industry we survey pharmacists, doctors, surgeons, and nurses to gain insights and key information about a medical product, device, or piece of equipment that customers are going to use. Surveys take the form of questionnaires designed by our own analyst team. They play an important role in primary research because they help us identify the key target audiences engaged with the market. Our survey team targets these audiences to gather insights, and the resulting customer perspectives are used to formulate market strategies. Market surveys also help us understand the current competitive situation in the industry. Our survey process typically involves a 360-degree analysis of the market. It begins by identifying the prospective customers for a product or service related to the market or industry, to obtain data on how that product or service could fit into customers’ lives.

Secondary Research: Secondary data sources include information published by non-profit organizations such as the World Bank and WHO, company filings, investor presentations, annual reports, national government documents, statistical databases, blogs, articles, white papers, and others. From annual reports, we analyse a company’s revenue to understand its key segments and market share in a particular region. We also analyse company websites and apply the product mapping technique, which is important for deriving segment revenue. In the product mapping method, we select and categorize the products offered by companies serving a domain-specific market, then deduce the product revenue for each company to arrive at an overall estimate of the market size. We also source data and analyse trends based on information received from supply-side and demand-side intermediaries in the value chain. The supply side refers to data gathered from suppliers, distributors, and wholesalers; the demand side refers to data gathered from end customers in the respective market domain.

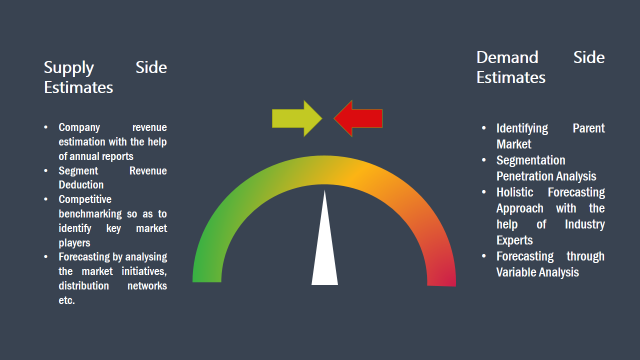

The supply side for a domain specific market is analysed by:

The demand side for the market is estimated through:

In-house Library: Apart from these third-party sources, we maintain an in-house library of qualitative and quantitative information. Our in-house database includes market data for various industries and domains. The data is updated regularly as market conditions change. Our library includes historical databases, internal audit reports, and archives.

In some cases, no metadata or raw data is available for a domain-specific market. In those cases, we use our expertise to forecast and estimate the market size in order to generate comprehensive data sets. Our analyst team adopts a robust research technique to produce the estimates:

Data Synthesis: This stage involves analysing and mapping all the information obtained in the previous step. It also involves scrutinizing the data for any discrepancies observed during data gathering. The data is collected with the heterogeneity of sources in mind. Robust scientific techniques are in place for synthesizing disparate data sets and providing the essential contextual information that can orient market strategies. The Brainy Insights has extensive experience in data synthesis, where the data passes through various stages:

Market Deduction & Formulation: The final stage comprises assigning data points to the appropriate market spaces so as to deduce feasible conclusions. A holistic approach to market sizing, based on analyst perspective and subject matter expertise and coupled with industry analysis, also plays a crucial role in this stage.

This stage involves finalizing the market size and the numbers collected in the data integration step. Data interpolation ensures that there are no gaps in the market data. Our analysts then carry out trend analysis using extrapolation techniques to produce the best possible forecasts for the market.
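As a rough illustration of interpolation between two known data points, the sketch below fills in yearly values between the report's 2023 and 2033 market sizes. It assumes a constant annual growth factor, which is a simplification and not necessarily the technique The Brainy Insights uses; the function name `constant_growth_path` is hypothetical, and the intermediate values it produces are derived for illustration, not figures from the report.

```python
def constant_growth_path(start_year: int, start_value: float,
                         end_year: int, end_value: float) -> dict[int, float]:
    """Interpolate yearly values between two points at a constant growth factor."""
    periods = end_year - start_year
    if periods <= 0 or start_value <= 0 or end_value <= 0:
        raise ValueError("need end_year > start_year and positive values")
    # Annual growth factor that takes start_value to end_value in `periods` steps.
    factor = (end_value / start_value) ** (1 / periods)
    return {start_year + i: start_value * factor ** i for i in range(periods + 1)}


# Endpoints from the report; everything in between is an illustrative estimate.
path = constant_growth_path(2023, 1.30, 2033, 10.30)
for year, value in path.items():
    print(f"{year}: USD {value:.2f} billion")
```

The same growth factor could be applied beyond the last known year to extrapolate, though any such projection rests entirely on the constant-growth assumption.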

Data Validation & Market Feedback: Validation is the most important step in the process. Validation and re-validation through an intricately designed process help us finalize the data points to be used in the final calculations.

The data validation interviews and discussion panels are typically composed of the most experienced industry members. Participants include, but are not limited to:

Moreover, we always validate our data and findings through primary respondents from all the major regions we cover.
